Some thoughts about integrating documentation, modelling and publication for historical 3D models

by Tijm Lanjouw, 4D Research Lab, University of Amsterdam

One of the main questions of the PURE3D project is how we can publish 3D models in a way that ensures their transparency, which is an issue of high concern in the virtual heritage research community (e.g. Bentkowska-Kafel et al. 2012). In the 4D Research Lab (4DRL) we create historical and archaeological 3D reconstructions of past places and objects. An important aspect of transparent publication is that such reconstruction models are honest about the processes of their creation. These are complex spatial and material models based on a heterogeneous set of data, sources and interpretations. Ideally, models should include metadata, and especially paradata about the base data and its quality, about historical sources and how they are used, and about the type of reasoning that has been applied to fill in gaps. This information should be documented somewhere and published alongside the model, whether the model is used as a space for analysis or as popularising academic output.

At the 4D lab, our current solution is a report series (Waagen & Lanjouw 2021) that serves as the definitive publication about the genesis of our models and the insights they generated. In the discipline at large, various initiatives have tried to create metadata standards for virtual heritage models, the results of which are neatly summarised in PURE3D’s recent project report. Such standards offer metadata definitions and schemas, but leave it up to the user to organise the actual creation of the meta- and paradata. Especially lacking is an open discussion about how this should be practically implemented in modelling workflows.

By modelling workflow, I mean all the nitty-gritty work of collecting, studying and analysing images, field data, articles and previous models, and then converting them into a digital 3D model by means of a plethora of technical 3D modelling tools and various types of thinking and interpretation. In my case, but I believe this is more generally so, much of the source data and documents are simply stored alongside the model on a hard drive as separate files in a hopefully well-organised folder structure. I work a lot with notes, referenced sources (e.g. images) and data (e.g. point clouds) placed inside the modelling program (Fig. 1). The problem arises, however, when the model is to be published and the sources and interpretations are to be integrated in the model as annotations. This requires a sizeable effort of reconnecting all this information. Time we often don’t have.

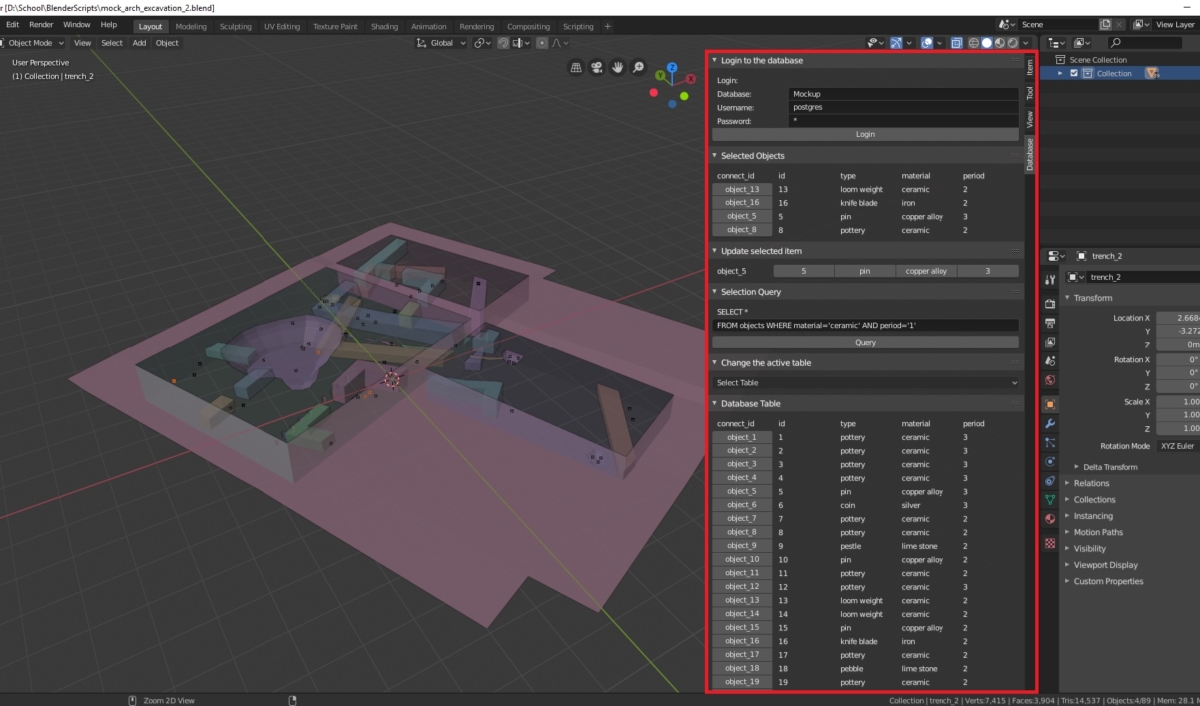

Figure 1. Example of a workspace in Blender, showing various layers of information (raster images and models), annotations in the 3D viewport, a colour-coded reconstruction certainty classification, and paradata administration kept in the text editor of the program.

To decrease the time spent on administration, and therefore lower the threshold to publishing a model completely, it would be ideal if all source references, associated paradata and annotations were automatically saved at export. The challenge is therefore to integrate the model made in the 3D modelling software more closely with the final published model. How can we accomplish this? In this blog post I’ll discuss a few ideas based on existing solutions.

3D information system

We should start by changing our perception of a 3D modelling program as purely computer graphics software. Analogous to GIS, a 3D modelling program can be an information system (‘3DIS’), with various types of data overlaying each other, and vector objects or features that have associated datasheets (‘attribute tables’) that are internal to the file format (e.g. ‘shapefiles’). Moreover, GIS packages are known for their database connection capacity, which allows other data to be linked to objects. Such features are not present by default in 3D modelling programs, but can generally be added by writing a custom extension. This is not a new idea at all: Hermon & Nikodem (2008), for example, have already created a database plugin for Blender for visualising different degrees of reliability of the reconstruction model. However, within historical or archaeological 3D modelling, this practice is nearly non-existent. Implementing this kind of addition does not have to be such a big effort, as demonstrated by a BA project that a computer science student did with us in the lab (Schrijver 2019). In the project, a PostgreSQL database was connected to an archaeological model of 3D point and vector data that could be edited and queried directly in the Blender 3D modelling program. The ability to do database entry inside the modelling program, and to connect this directly to newly modelled objects, means a significant streamlining of the modelling and administration procedures, and creates a close connection between model and paradata.

Figure 2. PostBlender, a PostgreSQL database interface for Blender, developed by Gijs Schrijver for a BA computer sciences project in 2018. Displayed is a model of an archaeological excavation mock-up and associated database.
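
The kind of link between modelled objects and a paradata table described above can be sketched in a few lines of Python, the scripting language of Blender. This is a minimal sketch, not the PostBlender implementation: it substitutes an in-memory SQLite database for PostgreSQL so that it runs without a server, and the table layout, field names and certainty scale are assumptions made purely for illustration. Inside Blender, the object names would come from the scene rather than being typed in by hand.

```python
import sqlite3


def create_paradata_db():
    """Create an in-memory database with one paradata row per model object."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE paradata (
            object_name TEXT PRIMARY KEY,  -- matches the object's name in the 3D scene
            source      TEXT,              -- hypothetical field: the source that was used
            certainty   INTEGER,           -- hypothetical scale: 1 (speculative) to 4 (measured)
            reasoning   TEXT               -- hypothetical field: how gaps were filled in
        )
        """
    )
    return conn


def record_paradata(conn, object_name, source, certainty, reasoning):
    """Insert or update the paradata row that belongs to one model object."""
    conn.execute(
        "INSERT OR REPLACE INTO paradata VALUES (?, ?, ?, ?)",
        (object_name, source, certainty, reasoning),
    )
    conn.commit()


def objects_below_certainty(conn, threshold):
    """Return the names of all objects whose certainty is below the threshold."""
    rows = conn.execute(
        "SELECT object_name FROM paradata WHERE certainty < ? ORDER BY object_name",
        (threshold,),
    )
    return [name for (name,) in rows]


conn = create_paradata_db()
record_paradata(conn, "wall_north", "excavation plan", 4, "traced from point cloud")
record_paradata(conn, "roof", "analogy with similar buildings", 1, "no direct evidence")
low_certainty = objects_below_certainty(conn, 3)
```

A query like the last one is what would drive, for example, a colour-coded certainty display in the viewport: the database decides which objects are speculative, and the modelling program only has to look them up by name.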

BIM

The system outlined above may appear very similar to something that already exists and may be a source of inspiration: the Building Information Model, or BIM. BIM models are used widely in building design and construction. BIM software allows for both the creation of 3D objects and the authoring of their metadata. For sharing across platforms, the metadata, file structure and naming conventions use an open international standard (ISO 16739-1:2018). Although BIM’s primary purpose is not publication, there is a universally accepted file format (.ifc), and web viewers exist for .ifc models that enable access to both the model and the metadata. Unfortunately, the IFC standard is not directly applicable to historical or archaeological reconstruction modelling, and attempts to use it have shown its limitations. But a BIM implementation tailored to the demands of heritage documentation, called HBIM, has already been introduced. It does exactly this: it integrates various sources of information, from scan data to derived models with metadata and associated documents. So HBIM might be the way to go. However, the open-source project seems to have stalled and there is no documentation about how to use it. Moreover, the problem of lack of integration with 3D graphics programs remains.

So why not simply use a GIS or a BIM editing program?

This is a question that often lingers underneath discussions about the best approach to historical/archaeological 3D modelling. Since GIS and BIM are basically information systems that are built around the idea of integrating graphical components with (meta)data, why do we even use computer graphics software such as Blender, 3ds Max or Cinema 4D? In the first place because it offers a degree of freedom with regard to freehand design and 3D modelling that is not available in GIS and BIM. This means that 3D graphics programs are much more attuned to the experimental approach to 3D reconstruction. They allow for the rapid creation and comparison of hypothetical reconstructions, in the process of which a better understanding of the subject is formed. They are, moreover, the better choice for preparing the model for photorealistic renders, or when VR, AR, web, or game environments are required. And the latter are becoming increasingly important, even as research output. In short, there are very good reasons to stay with 3D graphics programs, and to try to improve and standardise the ways in which they interact with databases and other externally saved data.

A file format for publishing historical 3D models?

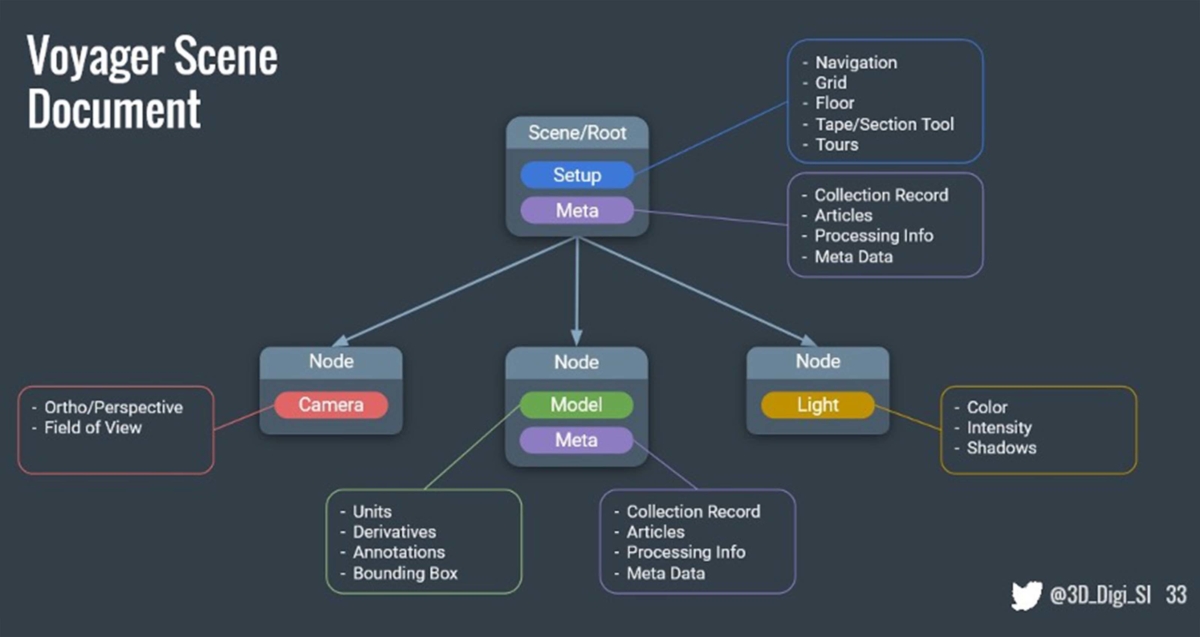

What we may need is a specific file format for historical 3D models. And that is not such a big obstacle as it may seem. Existing 3D file formats can, for instance, be extended. 3D file formats come in two types: binary and human-readable. They are relatively simple files that list the location of corner points (vertices), edges and faces in a custom coordinate system. In addition, they can optionally contain information on the normals (directions) of vertices and faces, materials, lights, animations, coordinates for texture mapping, and links to external texture files. This means that such files already contain many different types of optional information. Open file formats such as .obj or the more advanced .gltf and .x3d are easily readable and can be extended by simply adding extra lines of information. The file format developed by the Smithsonian in the context of their Voyager web viewer for 3D models of artefacts is a good example. The Voyager JSON document format, SVX, is basically structured like the well-known .gltf, but adds features for storing metadata and linking to articles or processing information. Although SVX is designed to be used within the Smithsonian ecosystem for processing and displaying 3D models, existing .gltf exporters in 3D modelling programs can be modified with relative ease to also write the metadata. Another interesting innovation is that the work by the Smithsonian extends well beyond a viewer and a file format, as they created an entire 3D processing pipeline with many often-used tools, collectively called ‘Cook’. The efforts of the Smithsonian go a long way towards integrating processing (or modelling) with publication of the results and metadata.

Figure 3. Voyager Scene Document, an example of a 3D file format that allows storing metadata and linking to paradata (https://smithsonian.github.io/dpo-voyager/document/overview/).
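
To make the idea of extending an existing format concrete, the sketch below adds paradata to a node in a minimal .gltf document. It relies on the `extras` property that the glTF 2.0 specification reserves for application-specific data. It does not reproduce the SVX schema, and the function name `attach_paradata` and the paradata keys are hypothetical, chosen only for illustration.

```python
import json


def attach_paradata(gltf, node_name, paradata):
    """Return a copy of a glTF document with paradata stored in a node's 'extras'."""
    updated = json.loads(json.dumps(gltf))  # deep copy via a JSON round trip
    for node in updated.get("nodes", []):
        if node.get("name") == node_name:
            # 'extras' may hold any application-specific JSON value
            node.setdefault("extras", {}).update(paradata)
            return updated
    raise KeyError(f"no node named {node_name!r}")


# A minimal glTF 2.0 document: one scene containing one (empty) node.
minimal_gltf = {
    "asset": {"version": "2.0"},
    "scenes": [{"nodes": [0]}],
    "nodes": [{"name": "roof"}],
}

annotated = attach_paradata(
    minimal_gltf,
    "roof",
    {
        "source": "analogy with similar buildings",  # hypothetical key
        "certainty": 1,                              # hypothetical key and scale
    },
)

# The text that would be written to a .gltf file.
gltf_text = json.dumps(annotated, indent=2)
```

Because `extras` is part of the format itself, a viewer that knows nothing about the added keys can still load the geometry, while a viewer built for historical models could read them and display them as annotations.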

Another approach would be to follow the example of GIS file types like the shapefile, which is represented by a related set of files: one containing the geometries, another the georeferencing, and another an attribute table that is expandable to any number of fields with various data types (text, dates, numbers, etc.). One of the strengths of this system is that attribute table fields can be connected to data in external databases, which is one way to create large, linked datasets. Being able to edit attribute table data inside 3D graphics programs, and to export the attribute table alongside the 3D models, would solve many of the meta- and paradata authoring and publishing issues. Although ESRI’s 3D shapefile meets these requirements, the problem is that no 3D graphics programs support it, and the vertex modelling and texturing tools of ArcScene are too limited.
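
The shapefile-style approach, in which an attribute table travels alongside the geometry file, could look roughly like this in Python. It is a sketch under stated assumptions: the table is written as CSV rather than in the shapefile's own table format, the field names are hypothetical, and an in-memory buffer stands in for a sidecar file such as `model.csv` next to `model.obj`.

```python
import csv
import io

# Hypothetical attribute fields; object_name is the key shared with the 3D model.
FIELDNAMES = ["object_name", "source", "certainty"]


def write_attribute_table(records, stream):
    """Write one row of attributes per model object."""
    writer = csv.DictWriter(stream, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(records)


def read_attribute_table(stream):
    """Read the table back, keyed by object name (all values are read as text)."""
    return {row["object_name"]: row for row in csv.DictReader(stream)}


records = [
    {"object_name": "wall_north", "source": "excavation plan", "certainty": 4},
    {"object_name": "roof", "source": "analogy with similar buildings", "certainty": 1},
]

# An in-memory buffer stands in for the sidecar file on disk.
buffer = io.StringIO(newline="")
write_attribute_table(records, buffer)
buffer.seek(0)
table = read_attribute_table(buffer)
```

The only thing linking the two files is the shared object name, which is both the appeal of this design (any tool can read the table) and its weakness (renaming an object in the modelling program silently breaks the link).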

Conclusion

If we want to encourage academics and others to publish 3D models including source references, data and other documentation, a system should be created that decreases the amount of effort needed to prepare project files such as source data and paradata documents. Without a framework that facilitates this, the threshold will remain too high. Since a lot of the work of creating paradata occurs during modelling, we should start to think of an ecosystem that integrates the graphics programs with source data organisation and a publication platform. In this blog post, I proposed that one solution is to work towards a 3D information system with a custom file format for historical or archaeological 3D models that can also store the required metadata. Additionally, we must improve the integration of 3D graphics programs with GIS, for instance by sharing a database of models, paradata and linked data. This would be a significant optimisation of the workflow, allowing more researchers to exhaustively publish their models, thereby improving the transparency of their products.

Literature

Bentkowska-Kafel, A., Denard, H., & Baker, D. (2012). Paradata and transparency in virtual heritage. Farnham, Surrey, England: Ashgate.

Waagen, J., & Lanjouw, T. (2021). 4DRL Report Series. University of Amsterdam / Amsterdam University of Applied Sciences. Collection. https://doi.org/10.21942/uva.c.5503110.v1

Schrijver, G. (2019). PostBlender: een bi-directionele koppeling tussen een GIS database en Blender. Unpublished BA-thesis, University of Amsterdam.